Cloud Cost Optimization: Practical Strategies to Reduce Your AWS/GCP Bill Without Downgrading Performance

Did you know enterprises spend almost eighteen billion dollars yearly on unused or underutilized cloud resources across AWS, GCP, and Azure, accounting for nearly 30% of all cloud spending?

With an $18 billion fund, an enterprise could launch revolutionary products and services, expand into multiple new global markets, acquire smaller competitors, invest heavily in AI and sustainable technologies, or undertake large-scale infrastructure projects within a year.

It is time to bid adieu to wasting funds and find practical strategies to optimize cloud costs without compromising performance. The good news is that with the right approach and resources, you can maximize the benefits of cloud hosting by identifying waste before it occurs, reducing idle resources and overprovisioning, without interrupting your systems or performance.

Let us explore the proven strategies and best practices that help teams reduce cloud bills while maintaining fast service.

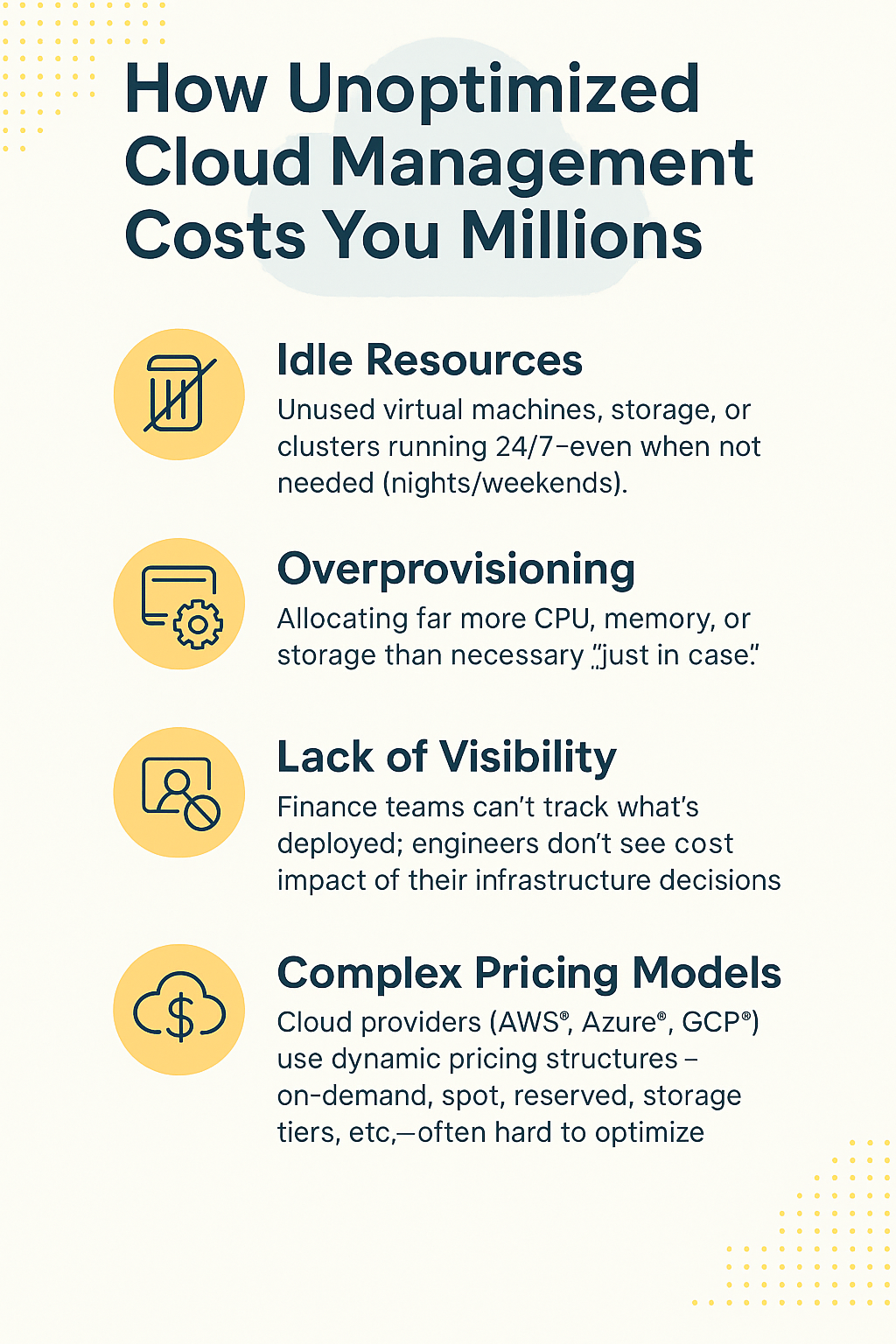

How Unoptimized Cloud Management Costs You Millions

You heard it right! Cloud waste is rarely obvious. It builds up from a combination of minor, overlooked issues, including idle resources, oversized deployments, poor communication, and confusing pricing models, which gradually pile up over time.

Here are the main reasons why it happens.

| Issue | Description | Impact on Business |
| --- | --- | --- |
| Idle Resources | Unused virtual machines, storage, or clusters running 24/7, even when not needed (nights/weekends). | Wasted spend on non-utilized infrastructure (aka “zombie resources”). |
| Overprovisioning | Allocating far more CPU, memory, or storage than necessary “just in case.” | Skyrocketing cloud bills resulting from overprovisioning are multiplied across workloads. |
| Lack of Visibility | Finance teams can’t track what’s deployed; engineers don’t see the cost impact of their infrastructure decisions. | Poor accountability and budget overruns due to siloed communication. |
| Complex Pricing Models | Cloud providers (AWS, Azure, GCP) utilize dynamic pricing structures (such as on-demand, spot, reserved, and storage tiers), often making them difficult to optimize. | Selecting the wrong model can unknowingly double or triple total cloud expenditure. |
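
To put a rough number on the idle-resource row, here is a minimal sketch of the arithmetic for a non-production instance that only needs to run during working hours. The 12-hour weekday schedule is an illustrative assumption, not a figure from any provider.

```python
HOURS_PER_WEEK = 24 * 7  # 168 hours if the instance never stops


def scheduled_savings(hours_per_day: float, days_per_week: int) -> float:
    """Fraction of always-on cost saved by running only on a schedule."""
    scheduled_hours = hours_per_day * days_per_week
    if not 0 <= scheduled_hours <= HOURS_PER_WEEK:
        raise ValueError("schedule must fit within one week")
    return 1 - scheduled_hours / HOURS_PER_WEEK


# Assumed schedule: a dev server kept up 12 hours a day, Monday to Friday.
saving = scheduled_savings(hours_per_day=12, days_per_week=5)
print(f"Saved versus 24/7: {saving:.0%}")
```

The calculation only covers compute hours; attached storage usually keeps billing while an instance is stopped.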

Practical Strategies to Optimize Your Cloud Cost

Worry not; we consulted with industry experts to gather some insightful feedback on the best and practical ways to optimize cloud management for enterprises.

1. Rightsizing (Matching Resources to Actual Demand)

Rightsizing is like buying clothes that fit perfectly. A fit that is too big is a waste of money, and one that is too small is uncomfortable. However, with the perfect fit, you get the best value for your money.

One of the most effective ways to reduce cloud costs is to rightsize resources. It ensures that your cloud resources (such as servers, CPUs, and memory) align with the actual demand of your applications, rather than overpaying for capacity you don’t use.

For example, an m5.4xlarge EC2 instance on AWS (16 vCPUs, 64 GB RAM) costs roughly eight times as much as an m5.large (2 vCPUs, 8 GB RAM), because on-demand prices within an instance family scale with size. Yet, in many cases, a much smaller instance can handle the same workload, especially if usage is moderate and not constant.

According to research, rightsizing alone can reduce cloud expenses by up to 25%, which is a massive saving, especially for organizations spending millions annually on AWS, GCP, or Azure.

How to Do Rightsizing Effectively

- Use Built-in Tools: Platforms like AWS Compute Optimizer and GCP Recommender automatically analyze your usage patterns and suggest smaller or cheaper instance sizes that still meet your performance needs.

- Track Usage Over Time: Don’t just look at peak hours. Monitor CPU, memory, and disk utilization over several weeks to determine the actual average demand of your applications.

- Switch to Burstable Instances: If workloads have irregular spikes, move them to burstable instances (AWS T3/T4g, GCP E2 shared-core). They handle regular traffic cheaply and burst above their baseline briefly when demand rises.

- Separate Environments: Production systems that handle customers’ needs require powerful instances. But staging, development, or testing environments can thrive on smaller ones.

- Iterate, Don’t Guess: Rightsizing isn’t a one-time exercise. As apps evolve, demand changes, so re-check and adjust regularly.
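
The steps above can be sketched as a small sizing helper. This is a simplified illustration, not how AWS Compute Optimizer or GCP Recommender work internally: it looks only at CPU (memory and disk would need the same treatment), and the size ladder, the 60% target, and the function name `recommend_size` are all assumptions made for the example.

```python
from statistics import quantiles

# Hypothetical size ladder within one instance family: (name, vCPUs).
SIZE_LADDER = [("large", 2), ("xlarge", 4), ("2xlarge", 8), ("4xlarge", 16)]


def recommend_size(cpu_percent_samples, current_vcpus, target_util=60.0):
    """Pick the smallest size whose p95 CPU load stays under target_util.

    cpu_percent_samples: utilization readings (0-100) of the current instance,
    ideally collected over several weeks rather than a single peak hour.
    """
    # The 95th percentile ignores rare spikes but still covers sustained peaks.
    p95 = quantiles(cpu_percent_samples, n=100)[94]
    vcpus_in_use = current_vcpus * p95 / 100
    for name, vcpus in SIZE_LADDER:
        if vcpus_in_use <= vcpus * target_util / 100:
            return name
    return SIZE_LADDER[-1][0]  # nothing fits: stay on the largest size


# Made-up samples: mostly 10% CPU with occasional 20% readings on 16 vCPUs.
samples = [10] * 90 + [20] * 10
print(recommend_size(samples, current_vcpus=16))
```

Using a high percentile instead of the average or the maximum is a design choice: the average hides sustained busy periods, while the maximum lets a single spike justify an oversized instance.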

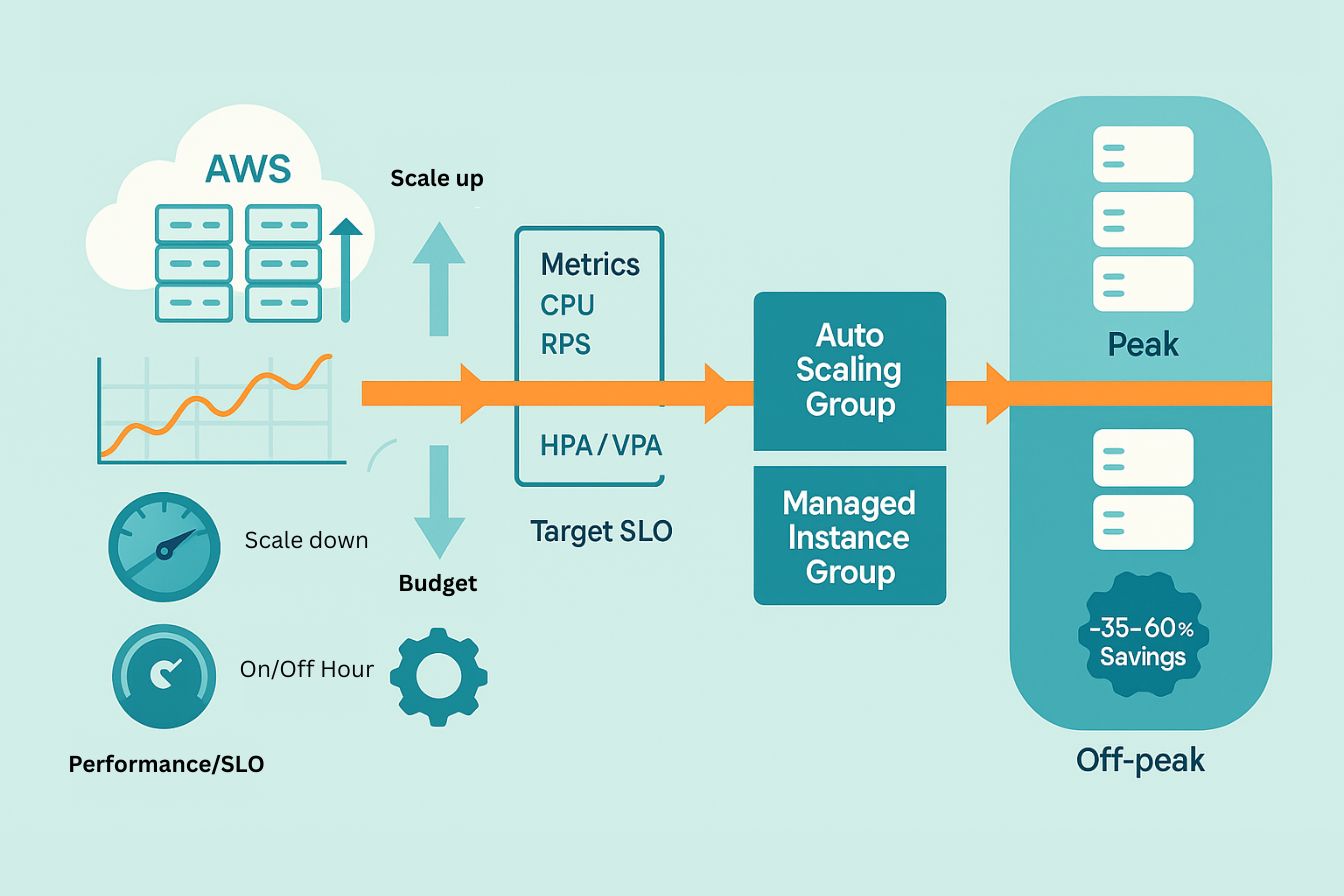

2. Embrace Autoscaling to Match Real-Time Demand

One of the biggest reasons companies overspend in the cloud is fear. Teams worry about sudden traffic spikes, so they keep extra servers running 24/7 to protect performance, and they pay for that spare capacity around the clock.

This is where autoscaling comes in. Instead of paying for unused capacity, autoscaling automatically adjusts your resources to match real-time demand.

For example, with AWS Auto Scaling or GCP Instance Group Autoscaler, your application can scale up or down based on live traffic and usage.

How to Get Autoscaling Right

- Define Scaling Policies: Use clear rules for when to add or remove instances (CPU usage > 70%, memory > 80%, etc.).

- Set Upper and Lower Limits: Prevent runaway costs by capping the maximum limit and ensuring a stable minimum.

- Load Balancing: Pair autoscaling with load balancers so incoming requests are evenly distributed.

- Test Before Peak Events: Simulate traffic spikes (such as product launches or seasonal sales) to ensure that scaling rules are activated correctly.

- Use Predictive Scaling: AWS and GCP now offer machine learning–based autoscaling that forecasts demand before it spikes, reducing lag.

- Monitor Regularly: Scaling rules that worked last year may not be effective for today’s traffic. Adjust them as patterns change.
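
As a rough illustration of how a scaling policy and its limits interact, the sketch below computes a desired instance count in a target-tracking style and clamps it between a minimum and a maximum. It is a simplified model, not the actual algorithm used by AWS Auto Scaling or the GCP autoscaler; the 70% target and the 2 to 10 instance bounds are assumed values.

```python
import math


def desired_instances(current, cpu_percent, target_cpu=70.0,
                      min_instances=2, max_instances=10):
    """Target-tracking style calculation, clamped to lower and upper limits."""
    if current < 1:
        raise ValueError("current must be at least 1")
    # Scale the fleet in proportion to how far load is from the target.
    wanted = math.ceil(current * cpu_percent / target_cpu)
    # The cap prevents runaway cost; the floor keeps a stable minimum.
    return max(min_instances, min(max_instances, wanted))


for cpu in (10, 35, 90, 140):
    print(cpu, desired_instances(current=4, cpu_percent=cpu))
```

Real autoscalers add cooldown periods and averaging windows on top of this so that brief spikes do not cause the fleet to flap up and down.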

3. Use Reserved Instances and Committed Use Discounts

If your workloads run consistently, such as databases, backend services, or applications that operate 24/7, paying on-demand pricing can be the most expensive way to utilize the cloud.

These workloads need cost efficiency more than sudden flexibility. This is where Reserved Instances (RIs) in AWS and Committed Use Discounts (CUDs) in GCP come into play.

By committing to use specific resources for a one- or three-year term, you trade flexibility for significant savings.

- AWS Reserved Instances can cut costs by up to 72% compared to on-demand pricing.

- GCP Committed Use Discounts offer up to 57% savings for predictable workloads.

For example, if a company runs 20 m5.large EC2 instances on demand year-round, switching them to Reserved Instances could cut the annual bill for those instances by nearly half without changing performance or architecture.
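
The arithmetic behind an example like this can be sketched in a few lines. The hourly rate and the 40% discount below are illustrative assumptions only; actual on-demand prices and reserved discounts depend on region, term, and payment option.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760


def annual_cost(hourly_rate, instance_count, discount=0.0):
    """Yearly cost of always-on instances at a given discount off on-demand."""
    return hourly_rate * (1 - discount) * HOURS_PER_YEAR * instance_count


ASSUMED_RATE = 0.096      # USD/hour for one m5.large, illustrative only
ASSUMED_DISCOUNT = 0.40   # hypothetical reserved discount; real ones vary

on_demand = annual_cost(ASSUMED_RATE, 20)
reserved = annual_cost(ASSUMED_RATE, 20, ASSUMED_DISCOUNT)
print(f"On-demand: ${on_demand:,.0f}/year")
print(f"Reserved:  ${reserved:,.0f}/year")
print(f"Saved:     ${on_demand - reserved:,.0f}/year")
```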

Best Practices for Using RIs and CUDs

- Identify Steady Workloads: Databases, backend APIs, and core apps that run nonstop are the best candidates.

- Mix and Match: Use RIs/CUDs for steady workloads and Spot/Preemptible instances for flexible or short-term jobs.

- Choose Term Length Wisely: A 3-year term offers the maximum discounts, but a 1-year term provides greater flexibility.

- Monitor Utilization: Unused reserved capacity is a waste of money. Track usage to ensure commitments match actual demand.

- Plan for Growth: Use forecasting tools to avoid under- or over-committing.
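
One way to reason about whether a workload is steady enough to commit: a commitment is paid for every hour of the term, while on-demand is paid only for hours actually used. Under that simplified model (a sketch that ignores upfront payment options and partial coverage), a commitment with discount d pays off only if the workload runs for more than 1 - d of the term.

```python
def break_even_utilization(discount):
    """Share of the term a workload must run for a commitment to pay off."""
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1 - discount


def commitment_is_cheaper(expected_utilization, discount):
    # On-demand cost is proportional to hours used; the commitment is
    # paid for every hour of the term whether the instance runs or not.
    return expected_utilization > break_even_utilization(discount)


# Hypothetical 40% discount: the workload must run more than 60% of the time.
print(commitment_is_cheaper(expected_utilization=0.9, discount=0.4))
print(commitment_is_cheaper(expected_utilization=0.5, discount=0.4))
```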

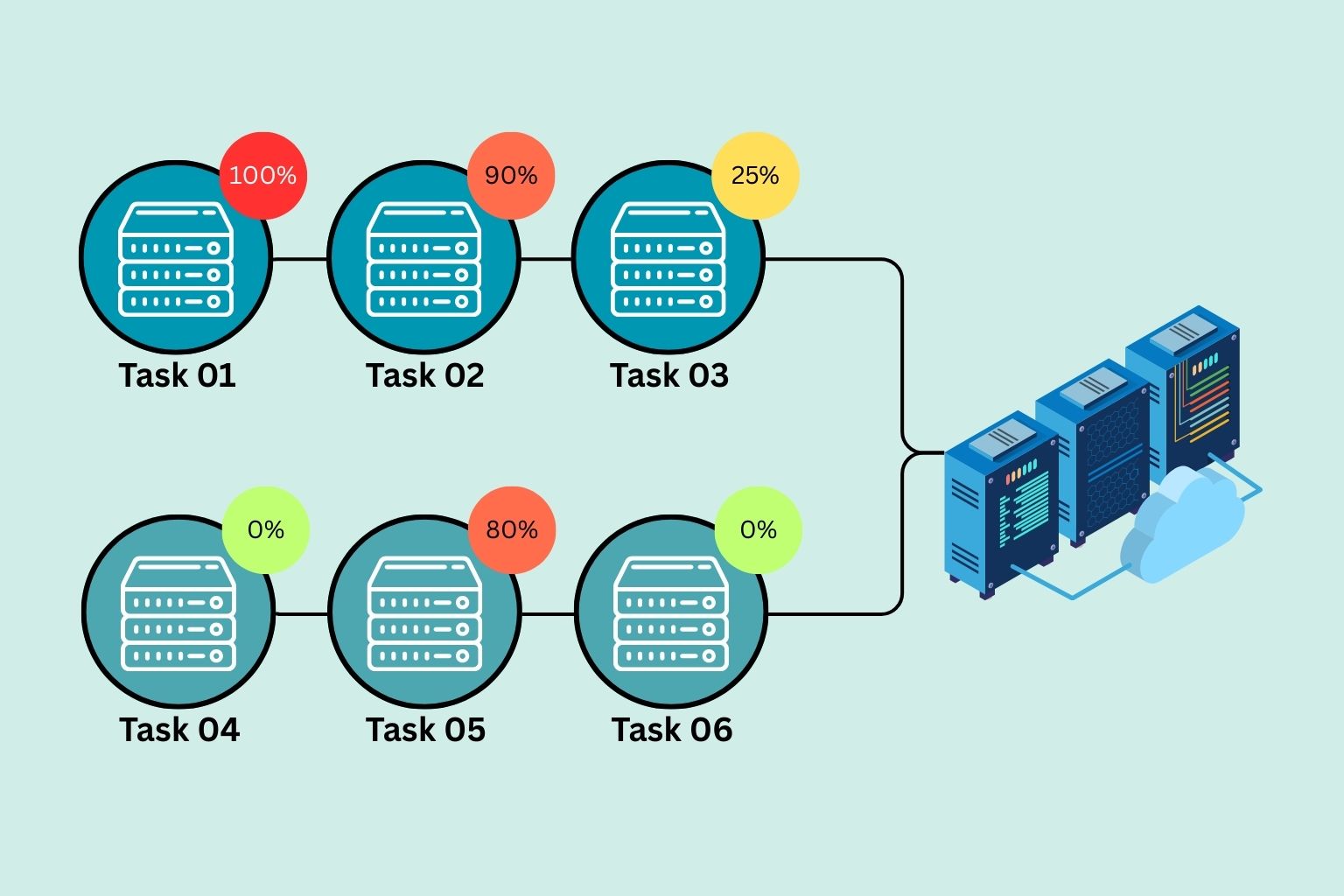

4. Harness Spot and Preemptible Instances for Non-Critical Workloads

Not every workload needs guaranteed uptime. For tasks that can be paused or restarted without harming users, AWS Spot Instances and GCP Preemptible VMs offer significant savings.

Perfect Use Cases for Spot/Preemptible Instances

- Data Analytics → Crunching large datasets where jobs can resume after interruption.

- Batch Processing → Rendering, simulations, or big jobs that run in chunks.

- Machine Learning Training → Training models where interruptions don’t affect results.

- CI/CD Pipelines → Continuous integration/testing tasks that can easily restart.

A famous example is Netflix, which uses AWS Spot Instances for video transcoding. By offloading this massive workload to a cheap, interruptible setting, Netflix saves millions of dollars every year.

How to Use Spot/Preemptible Instances Effectively

| Strategy | Description | Pricing Model / Cost Insight |
| --- | --- | --- |
| Automate Recovery | Use AWS Auto Scaling, GCP Managed Instance Groups, or Kubernetes to automatically reschedule tasks that are interrupted. | AWS Auto Scaling: no additional fee for the service; you pay only for underlying EC2, CloudWatch, etc. (Sedai, Medium, Amazon Web Services, Inc.). GCP MIGs: no extra charge beyond VM usage. (Jayendra's Cloud Certification Blog). Kubernetes (e.g., GKE/EKS): control plane management fees may apply; see the last row. |
| Mix On-Demand and Spot | Run mission-critical workloads on On-Demand or Reserved capacity, while flexible tasks use Spot/Preemptible instances for cost savings. | AWS Spot Instances: up to 90% discount compared to On-Demand; e.g., m4.xlarge Spot ≈ $0.02–0.03/hr vs. $0.10/hr On-Demand. (Sedai). |
| Diversify Instance Types | Spread workloads across different instance types and regions to mitigate capacity shortages. | No inherent cost. This strategy optimizes availability and resilience, particularly when paired with Autoscaling and Spot fallback strategies. |
| Test Resilience First | Simulate interruptions (e.g., preemption) in testing environments to ensure workloads can handle failures gracefully. | Testing itself doesn’t add direct costs; however, it helps avoid expensive downtime and improves reliability. |
| Managed Kubernetes Control Plane | Run container workloads with managed Kubernetes services. | EKS (AWS): $0.10/hr (~$72/month) per cluster for management plane. (Sedai). GKE (GCP): $0.10/hr per cluster for multi-zonal; the first zonal cluster is free. (Spot.io). AKS (Azure): control plane free; pay only for node resources. (Microsoft Azure). |
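
Automated recovery only helps if the interrupted job can pick up where it left off. The sketch below shows one common pattern, checkpoint and resume, using a local JSON file. The `interrupt_after` parameter simulates a Spot or preemptible reclaim, squaring a number stands in for real work, and the file layout is an assumption for illustration; a real job would write checkpoints to durable storage that survives the instance.

```python
import json
import os
import tempfile


def run_batch(items, checkpoint_path, interrupt_after=None):
    """Process items in order, saving progress so a restart can resume.

    interrupt_after simulates a Spot/preemptible reclaim after N items.
    Returns the list of results completed so far.
    """
    state = {"next_index": 0, "results": []}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # pick up where the last run stopped

    processed_this_run = 0
    while state["next_index"] < len(items):
        if interrupt_after is not None and processed_this_run >= interrupt_after:
            break  # simulated interruption: the instance disappears here
        item = items[state["next_index"]]
        state["results"].append(item * item)  # stand-in for real work
        state["next_index"] += 1
        processed_this_run += 1
        # Write to a temp file, then rename, so a kill mid-write
        # cannot leave a corrupt checkpoint behind.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, checkpoint_path)
    return state["results"]


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "checkpoint.json")
    first = run_batch([1, 2, 3, 4, 5], path, interrupt_after=2)
    second = run_batch([1, 2, 3, 4, 5], path)  # replacement instance
    print(first, second)
```

Checkpointing after every item keeps lost work to a minimum but adds I/O; longer-running jobs often checkpoint every few minutes instead.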

5. Optimize Cloud Storage

Storage is one of the most significant hidden costs in the cloud. Many enterprises unknowingly store rarely accessed data on expensive, high-performance storage, paying premium rates for files that are used only occasionally. Over time, it creates a silent drain on budgets.

The key is to align data value with the storage class. For example,

- AWS S3 Intelligent Tiering automatically shifts files between frequent-access and infrequent-access tiers.

- GCP Cloud Storage Nearline/Coldline offers storage that is up to 90% cheaper than standard tiers, making it ideal for backups and archives.

Common Storage Mistakes That Waste Money

- Keeping old log files on high-performance SSDs instead of cheap archival storage.

- Forgetting about old snapshots and unused volumes left behind after the instances that used them are deleted.

- Paying for duplicate backups or data that is never accessed.

Best Practices to Optimize Storage Costs

- Classify Data by Usage: Hot data (frequently used) on SSDs; warm/cold data (rarely used) on cheaper tiers.

- Automate Tiering: Enable lifecycle policies in AWS S3 or GCP Cloud Storage to automatically move files to lower-cost tiers.

- Audit Regularly: Run monthly cleanups to delete unattached volumes, stale snapshots, and redundant files.
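
As an illustration of automated tiering, the sketch below builds an S3 lifecycle rule as a plain Python dictionary. The `logs/` prefix, the day thresholds, and the helper name `build_lifecycle_rule` are assumptions chosen for the example; pick thresholds from your own access patterns and check the provider's minimum-age rules for each storage class.

```python
import json


def build_lifecycle_rule(prefix, days_to_infrequent=30, days_to_archive=90,
                         days_to_delete=365):
    """Build one S3 lifecycle rule that steps objects down to cheaper tiers."""
    if not days_to_infrequent < days_to_archive < days_to_delete:
        raise ValueError("transition days must increase tier by tier")
    return {
        "ID": f"tier-down-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": days_to_infrequent, "StorageClass": "STANDARD_IA"},
            {"Days": days_to_archive, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": days_to_delete},
    }


config = {"Rules": [build_lifecycle_rule("logs/")]}
print(json.dumps(config, indent=2))
```

With boto3, a dictionary of this shape is what `put_bucket_lifecycle_configuration` takes as its `LifecycleConfiguration` argument. The expiration step permanently deletes objects, so leave it out for data that must be retained.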

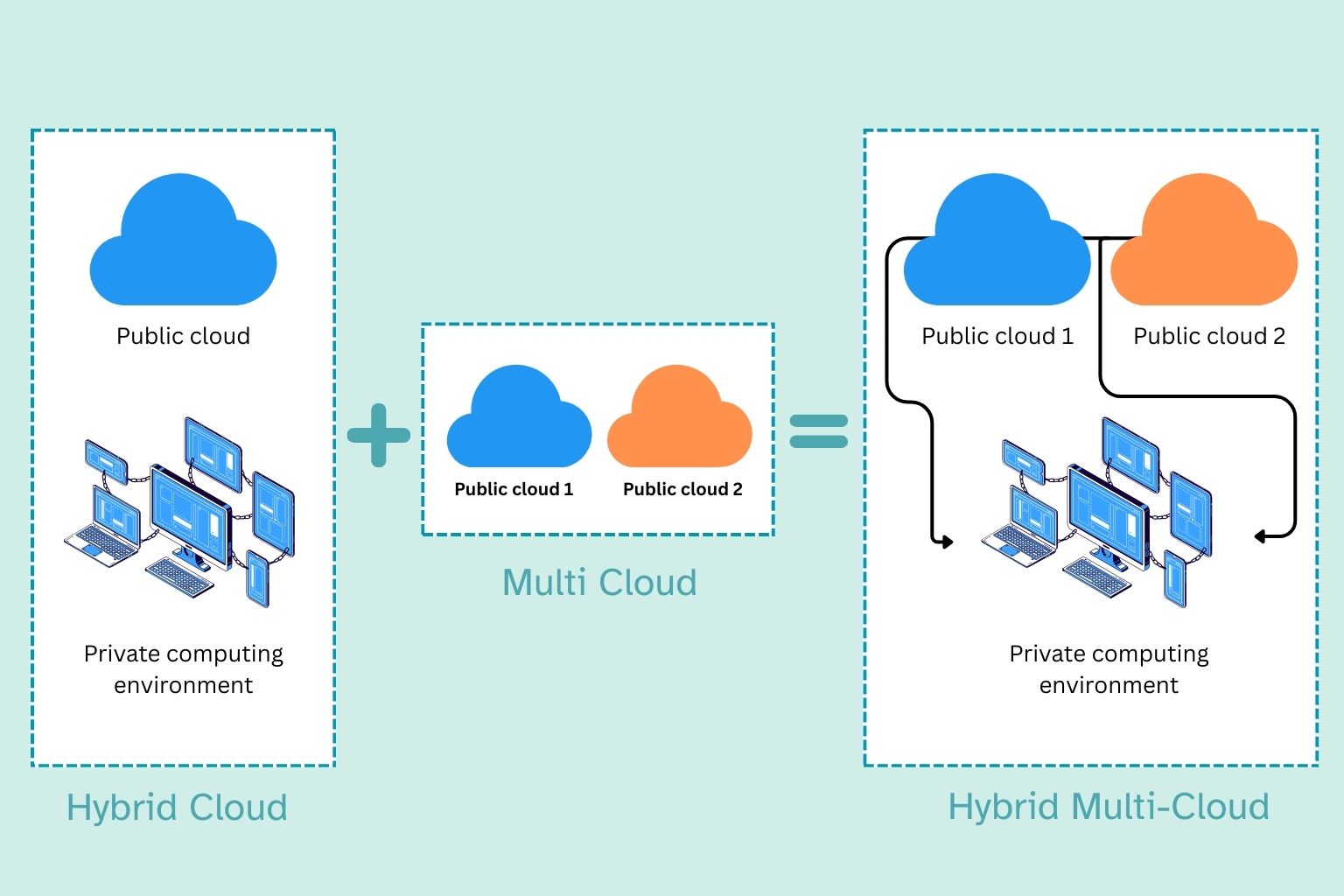

6. Adopt a Multi-Cloud and Hybrid Strategy Carefully

Going multi-cloud, that is, using more than one cloud provider, sounds like a smart cost-saving move.

However, in reality, it can be a double-edged sword. Done well, it allows you to leverage each provider’s strengths. Done poorly, it adds complexity, kills volume discounts, and creates integration headaches.

Best Practices for Multi-Cloud & Hybrid Cost Efficiency

- Don’t Spread Too Much - Splitting workloads across too many providers can cause you to lose volume discounts (like AWS Reserved pricing or GCP commitments).

- Simplify Integrations - Adding too many clouds can increase operational overhead, such as APIs, billing, and management tools. Keep it simple.

- Plan Data Movement Carefully - Transferring data between clouds often incurs egress fees, which can wipe out savings if not considered.
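
A quick check of the data-movement point: before moving a workload to a cheaper provider, subtract the egress fees it would generate from the compute savings. All three inputs below are hypothetical; substitute figures from your own bills and the providers' current price lists.

```python
def net_monthly_saving(compute_saving_usd, gb_transferred, egress_usd_per_gb):
    """Monthly saving from a cheaper second cloud after paying egress fees."""
    return compute_saving_usd - gb_transferred * egress_usd_per_gb


# Hypothetical inputs: $1,500 saved on compute, 20 TB moved between clouds.
result = net_monthly_saving(compute_saving_usd=1_500,
                            gb_transferred=20_000,
                            egress_usd_per_gb=0.09)
print(f"Net monthly saving: ${result:,.2f}")
```

A negative result means the egress fees outweigh the compute savings, so the split would cost more than keeping the workload in one place.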

7. Build a Culture of FinOps

FinOps stands for Finance and DevOps. It is the practice of holding engineers accountable for both costs and performance.

Cost optimization isn’t just about tools; it is about upholding a culture of cost efficiency throughout the enterprise.

Best Practices for Cost Savings

- Share cost reports with every engineering team weekly.

- Set budgets and alerts for projects.

- Reward teams that maintain high performance while reducing costs.
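
Budgets and alerts can start very simply. The sketch below flags teams that have reached a warning threshold or exceeded their budget; the team names, amounts, and the 80% warning level are made up for illustration, and in practice the spend figures would come from the provider's billing export.

```python
def budget_alerts(spend_by_team, budget_by_team, warn_at=0.8):
    """Return (team, status) pairs for teams at or over the warning level."""
    alerts = []
    for team, budget in sorted(budget_by_team.items()):
        spent = spend_by_team.get(team, 0.0)
        if spent >= budget:
            alerts.append((team, "over budget"))
        elif spent >= warn_at * budget:
            alerts.append((team, "approaching budget"))
    return alerts


# Hypothetical month-to-date spend and monthly budgets in USD.
spend = {"api": 950, "web": 1200, "ml": 100}
budgets = {"api": 1000, "web": 1000, "ml": 1000}
for team, status in budget_alerts(spend, budgets):
    print(f"{team}: {status}")
```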

Conclusion

In the cloud, savings don’t come from spending less—they come from spending smart.

By rightsizing, automating, leveraging discounts, and building a FinOps culture, companies can save millions while maintaining strong performance.

So, are you ready to balance your cloud bill? Keep it simple and find a tech partner that offers cloud project management and optimization for enterprises in the US.
